Selected Publications

(* equal contribution, † corresponding author)

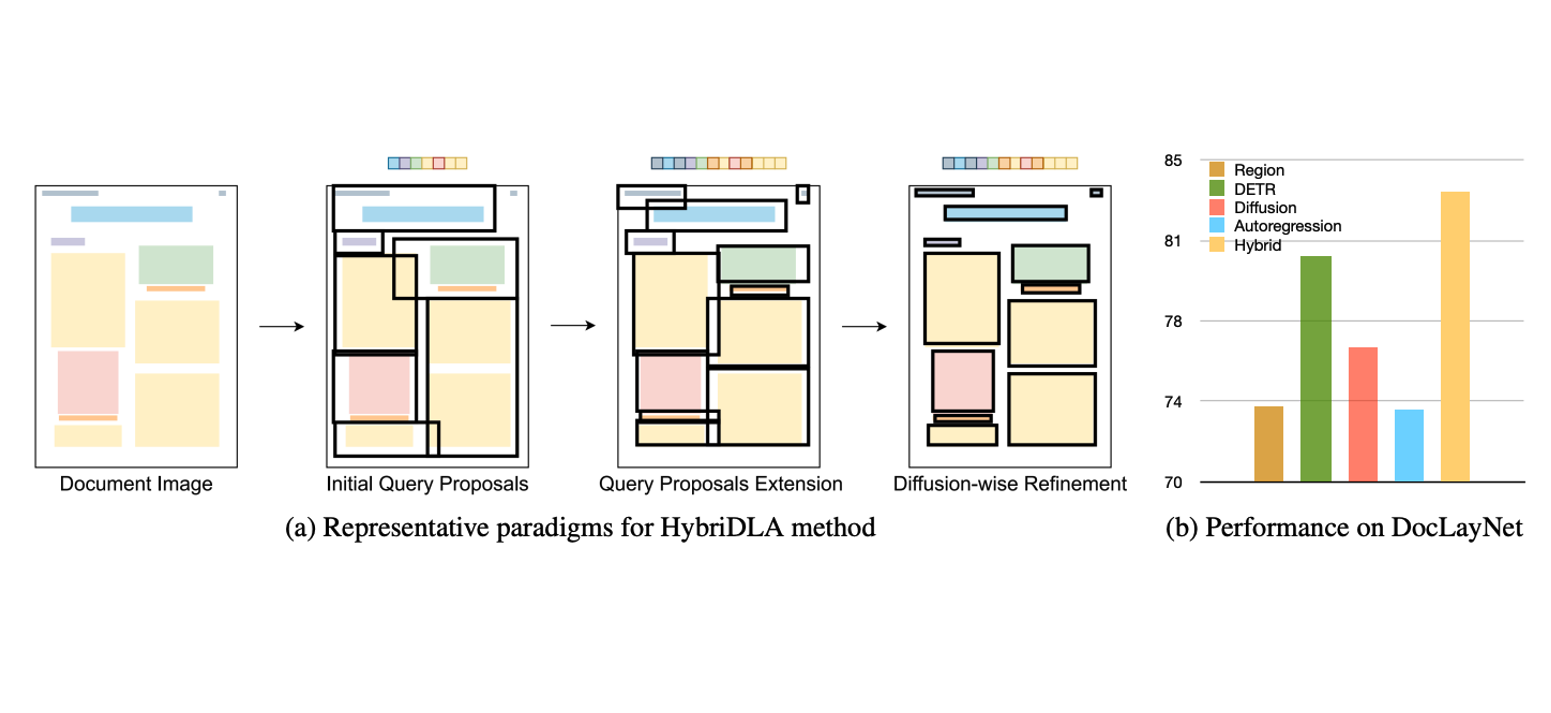

Y. Chen, O. Moured, R. Liu, J. Zheng, K. Peng, J. Zhang†, R. Stiefelhagen

AAAI 2026 (🏆 Oral) Project page Paper Code

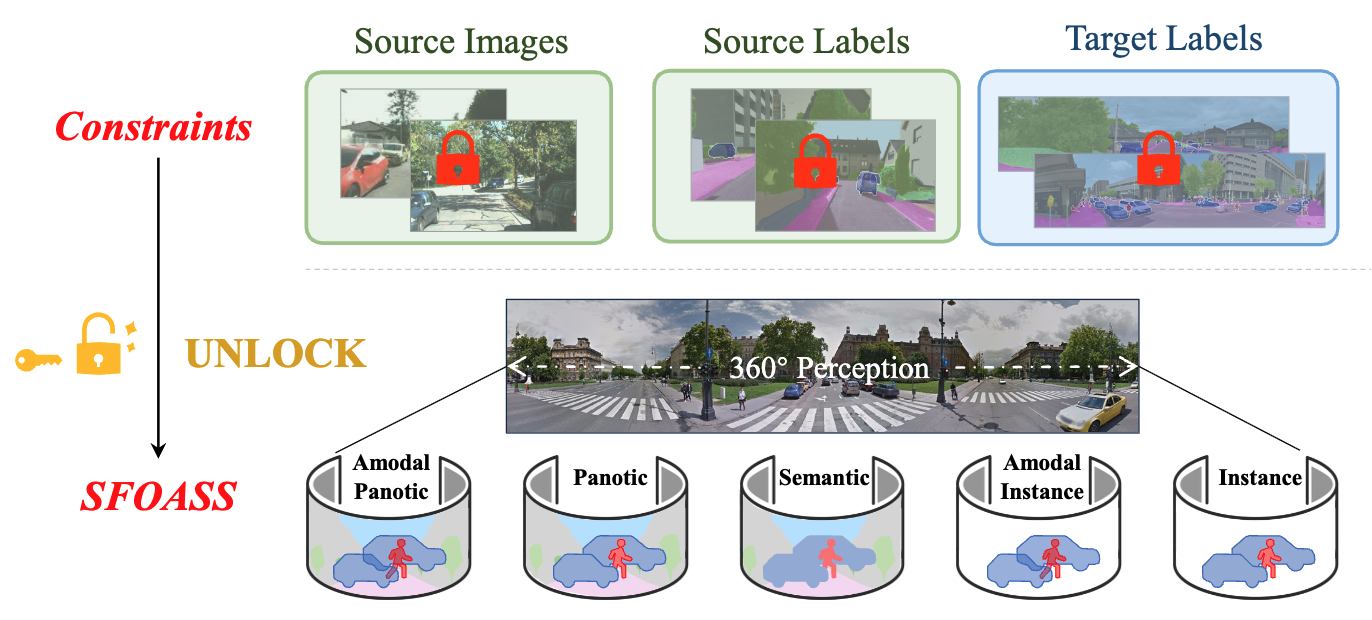

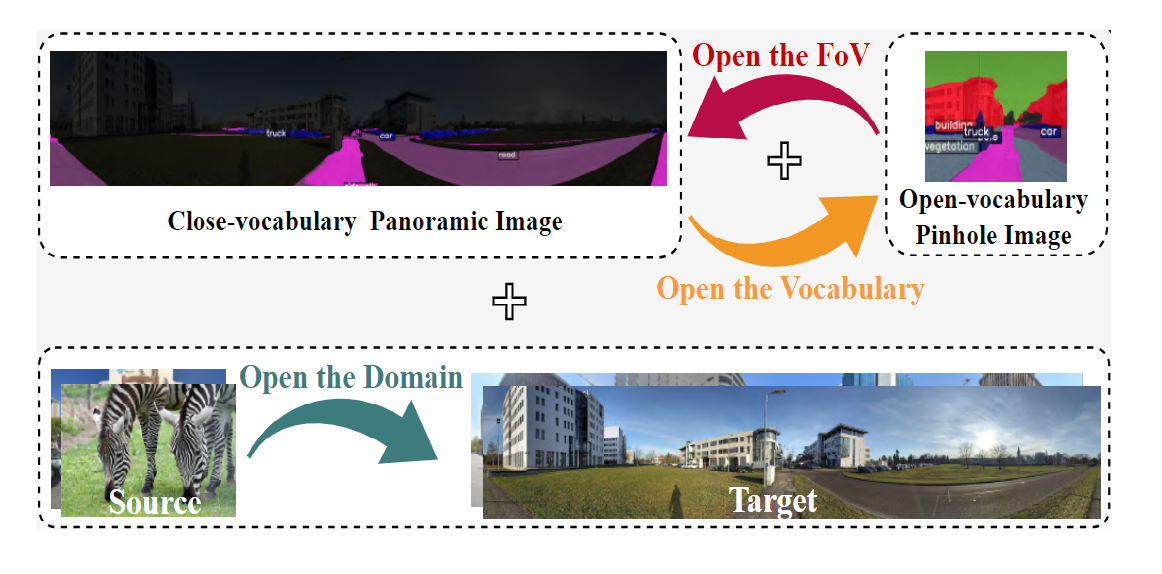

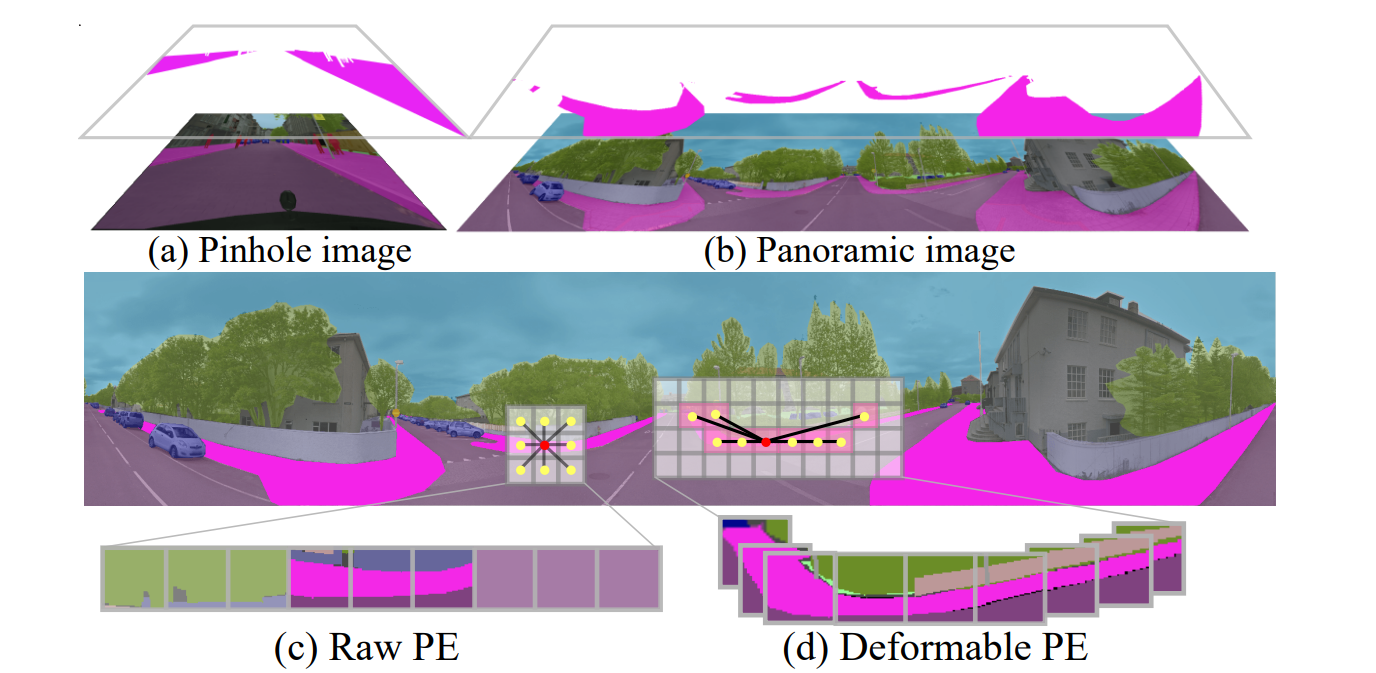

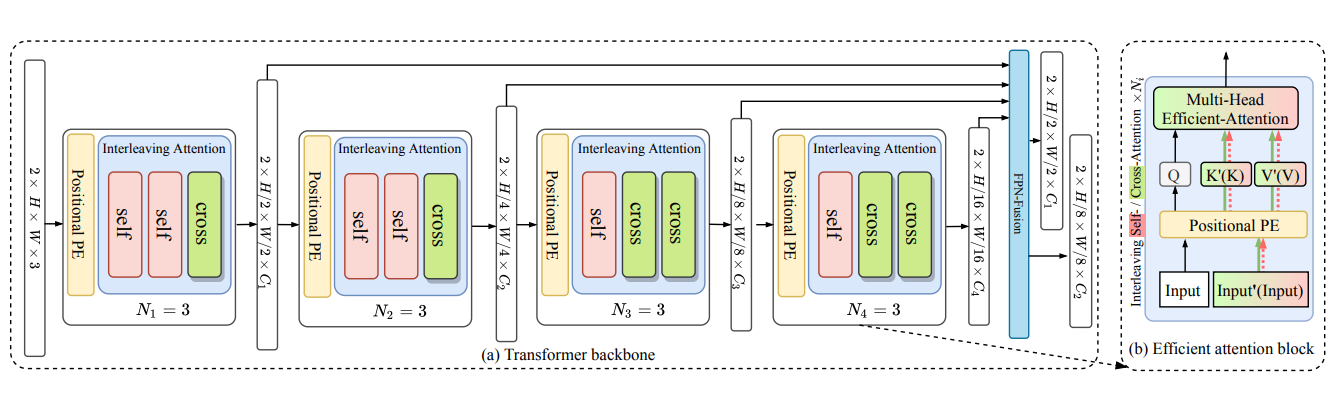

J. Zheng, R. Liu, Y. Chen, Z. Chen, K. Yang, J. Zhang†, R. Stiefelhagen

CVPR 2025 Project page Paper Code

C. Wu, Y. Wan*, H. Fu*, J. Pfrommer, Z. Zhong, J. Zheng†, J. Zhang, J. Beyerer

CVPR 2025 Project page Paper Code

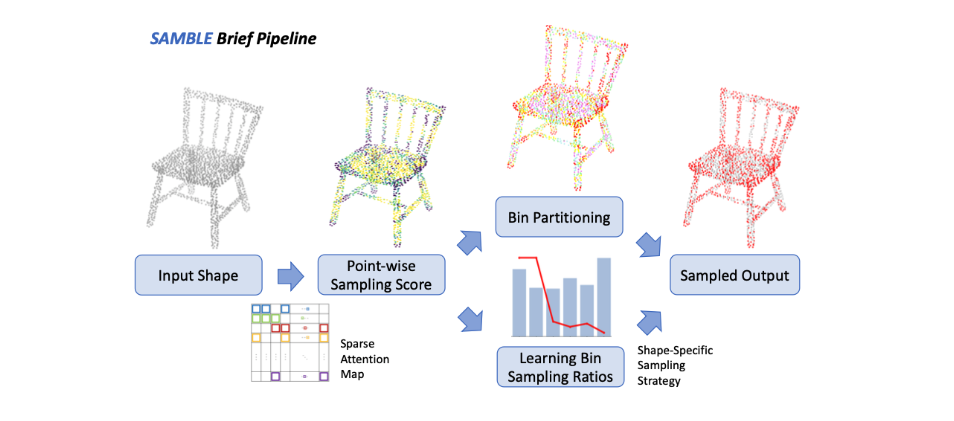

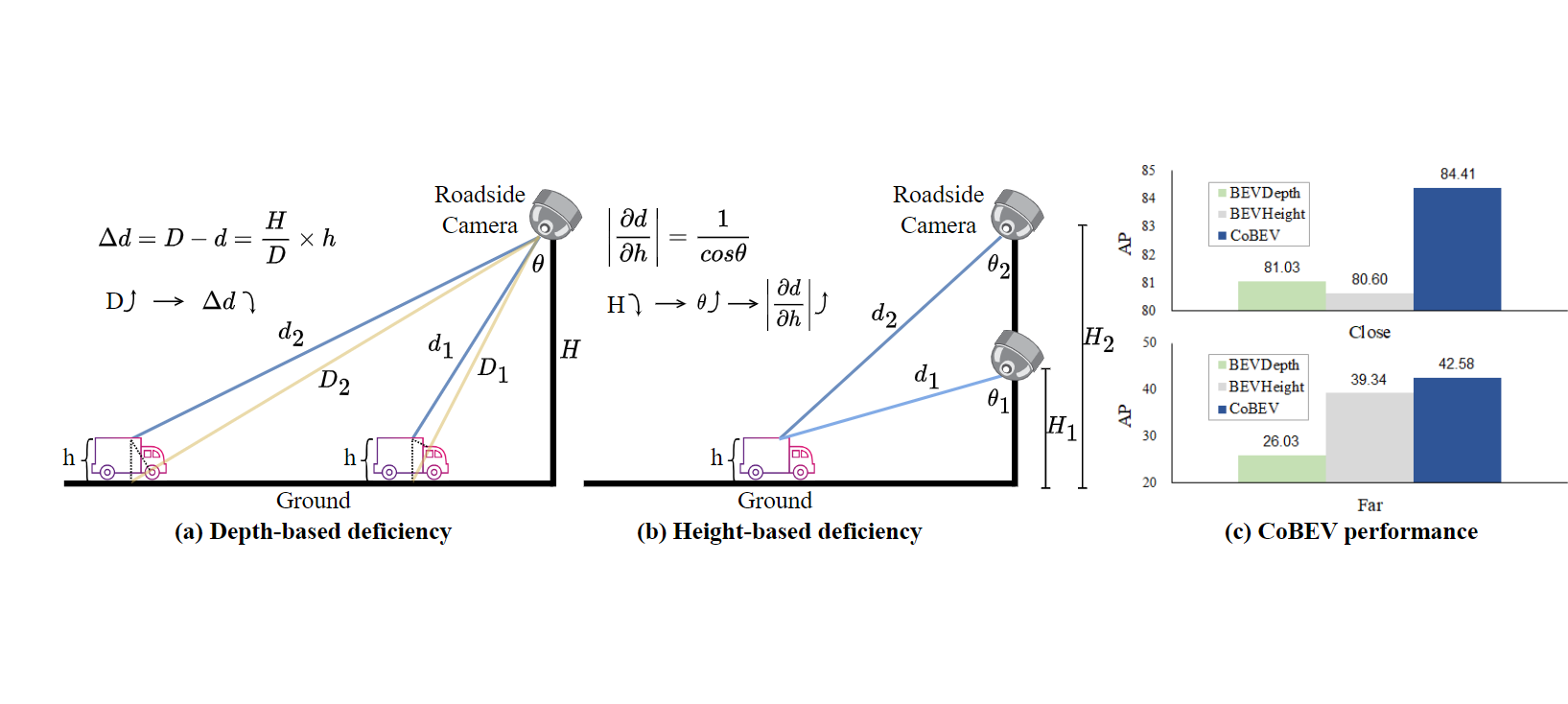

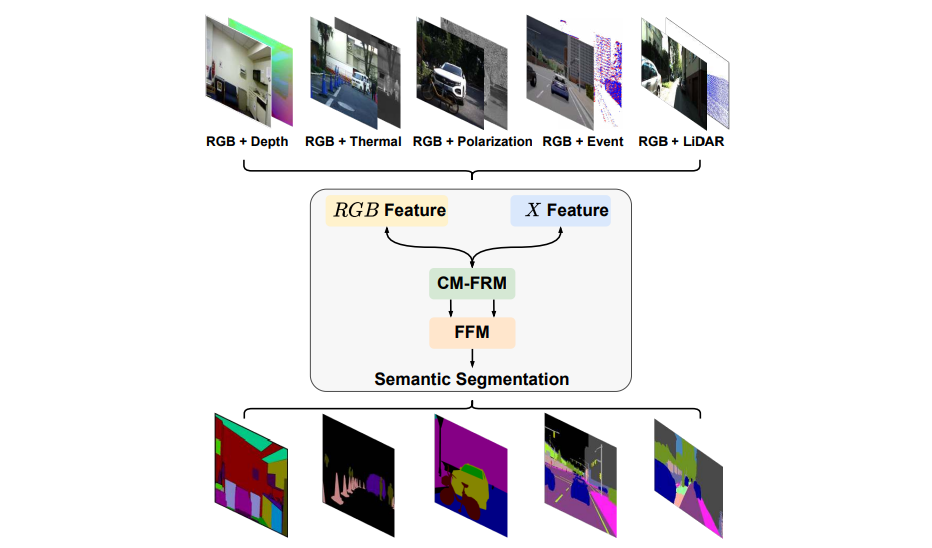

Y. Chen, R. Liu, J. Zheng, D. Wen, K. Peng, J. Zhang†, R. Stiefelhagen

ICLR 2025 Project page Paper Code

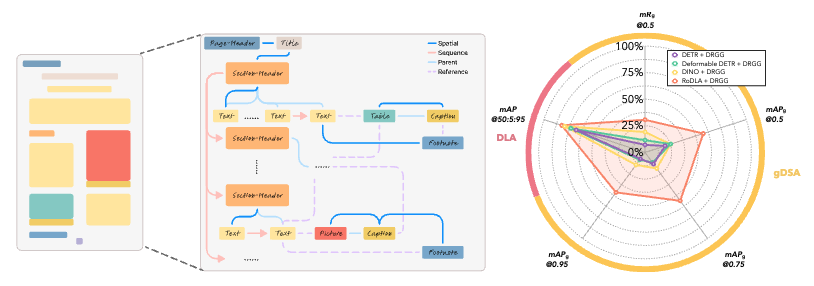

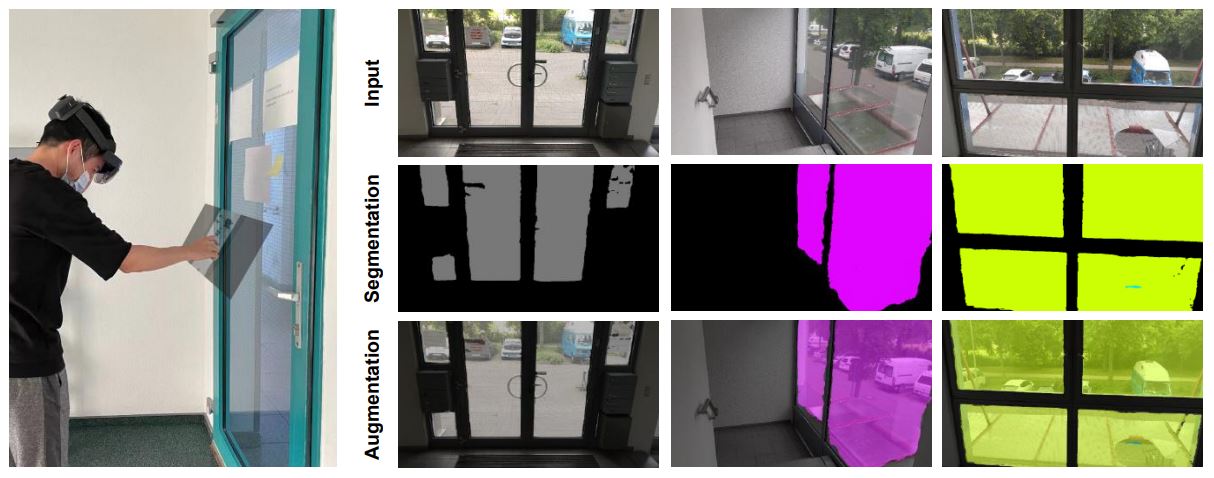

X. Jiang*, J. Zheng*, R. Liu, J. Li, J. Zhang†, S. Matthiesen, R. Stiefelhagen

WACV 2025 Project page Paper Code

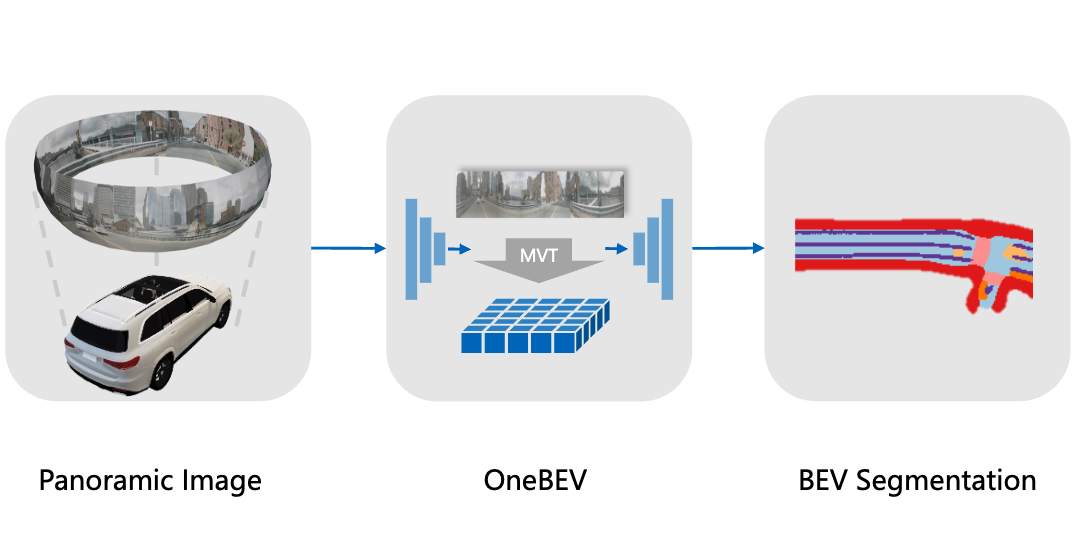

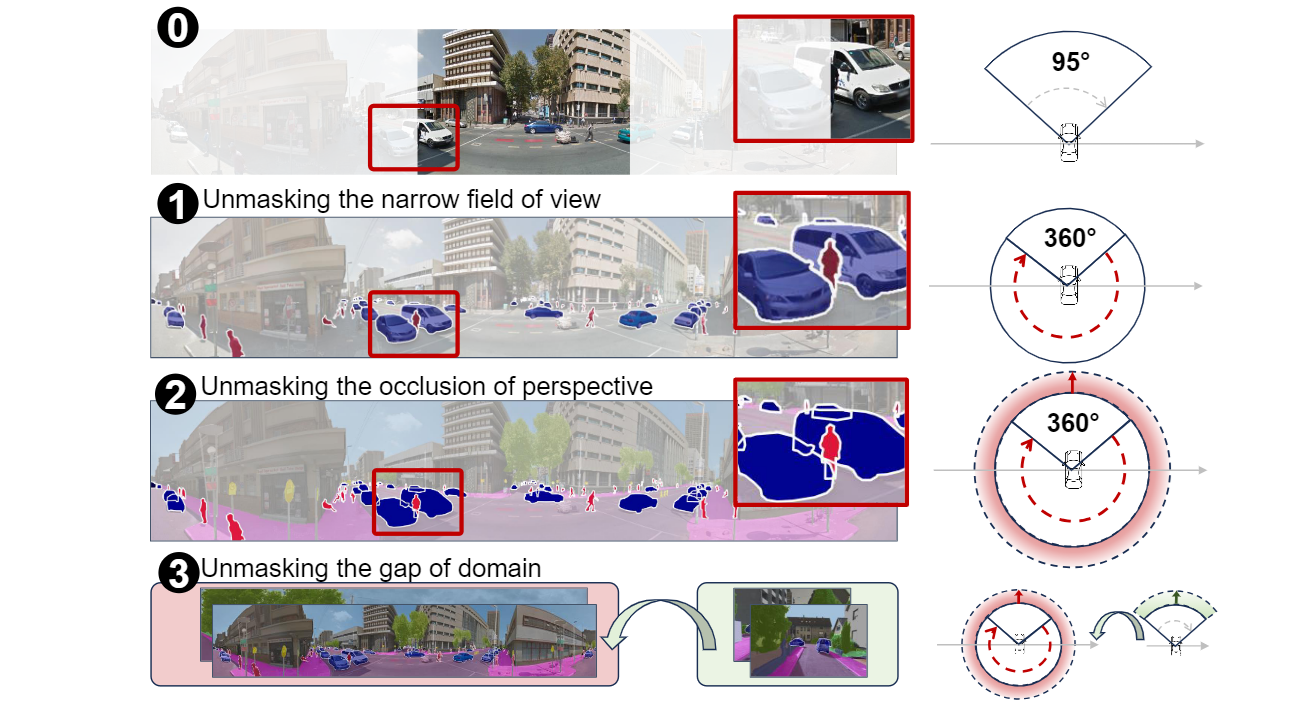

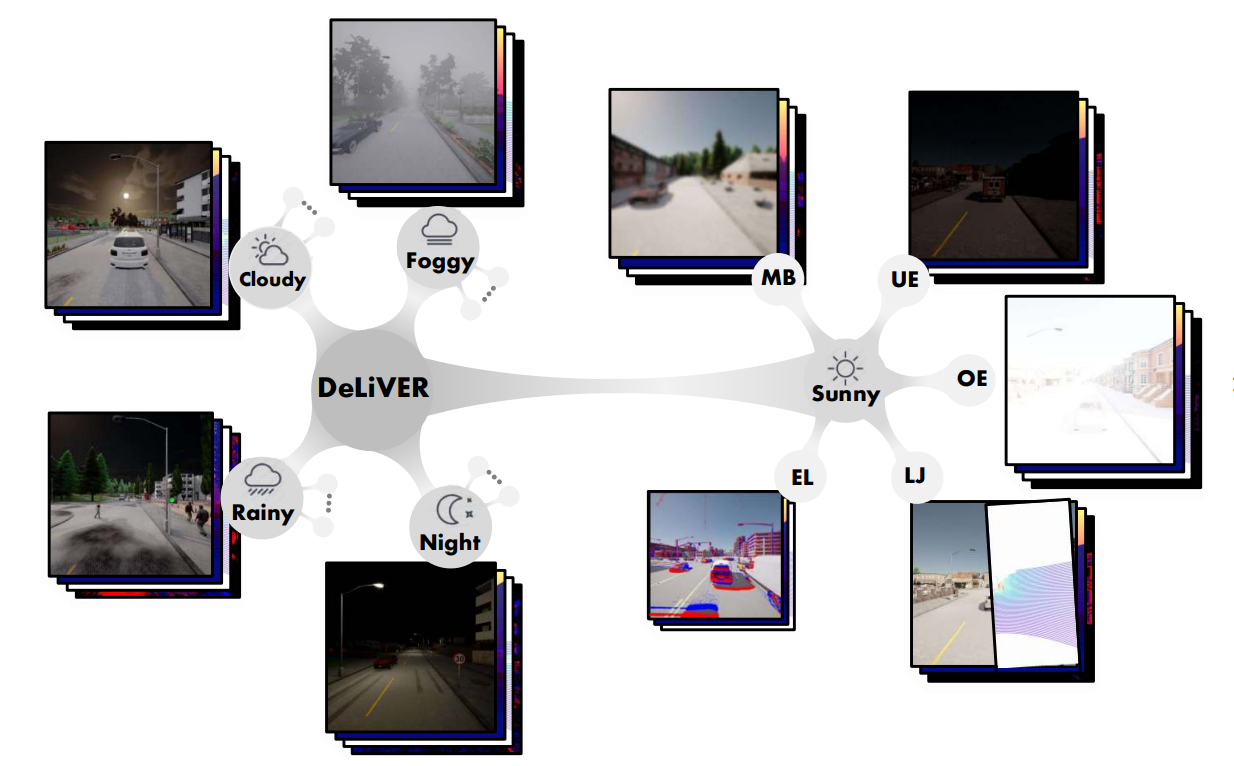

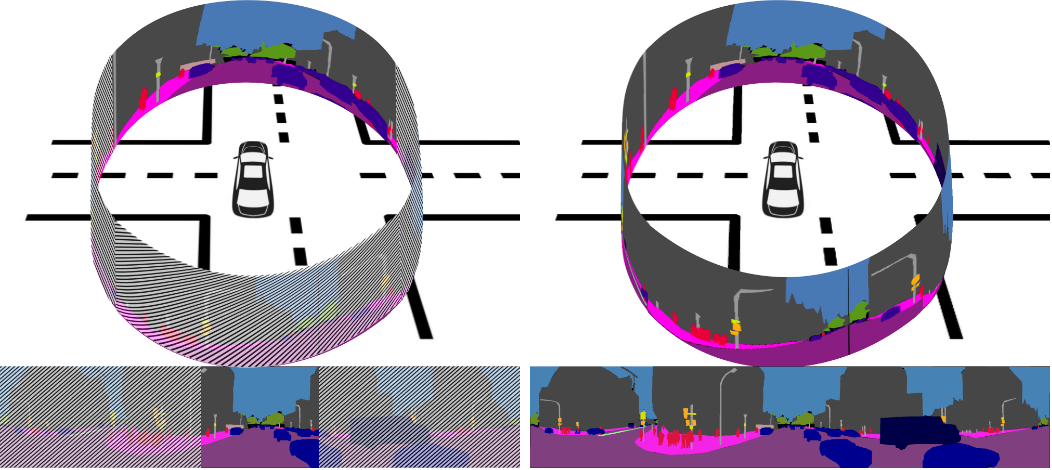

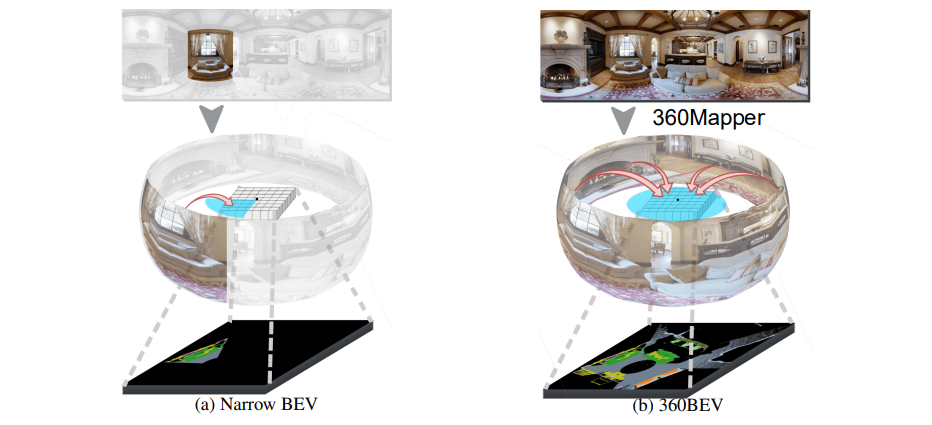

J. Zheng, R. Liu, Y. Chen, K. Peng, C. Wu, K. Yang, J. Zhang†, R. Stiefelhagen

ECCV 2024 Project page Paper Code

Y. Chen, J. Zhang†, K. Peng, J. Zheng, R. Liu, P. Torr, R. Stiefelhagen

CVPR 2024 Project page Paper Code

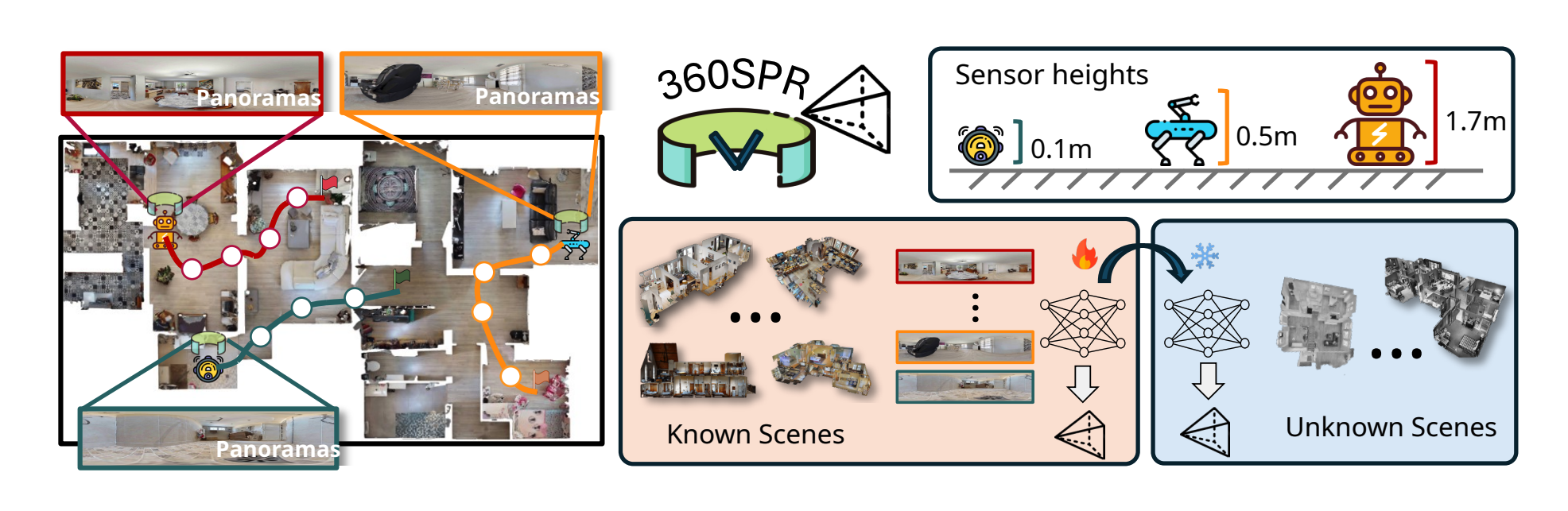

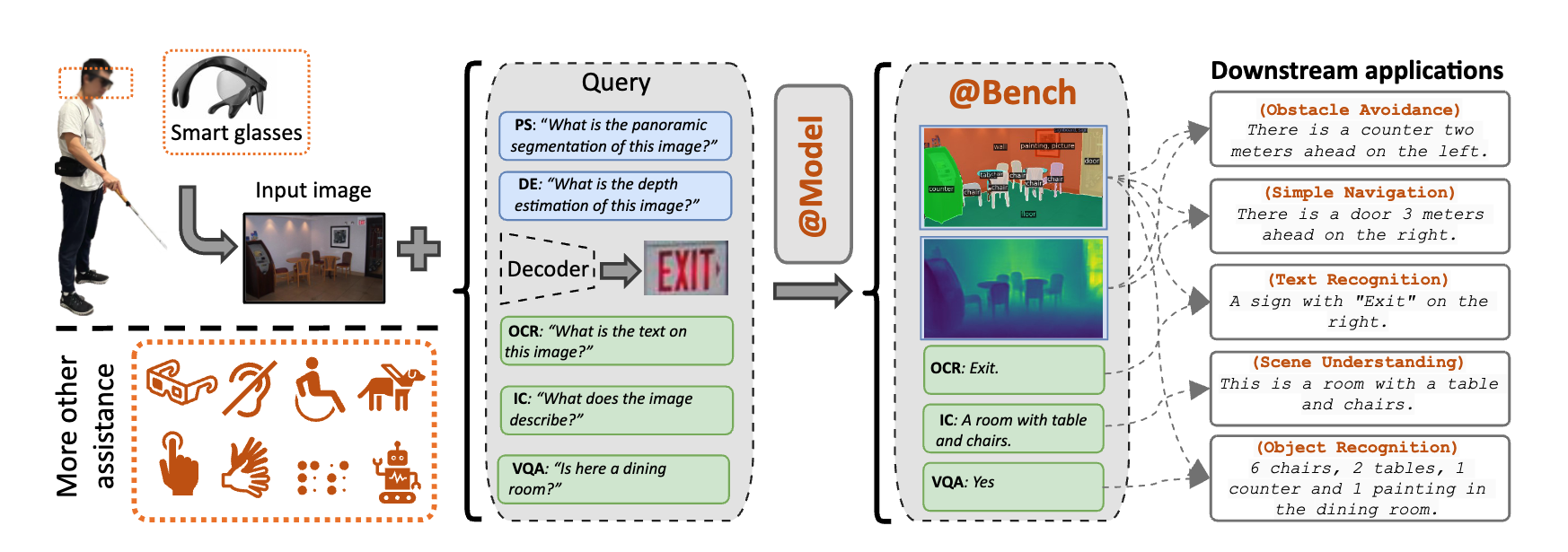

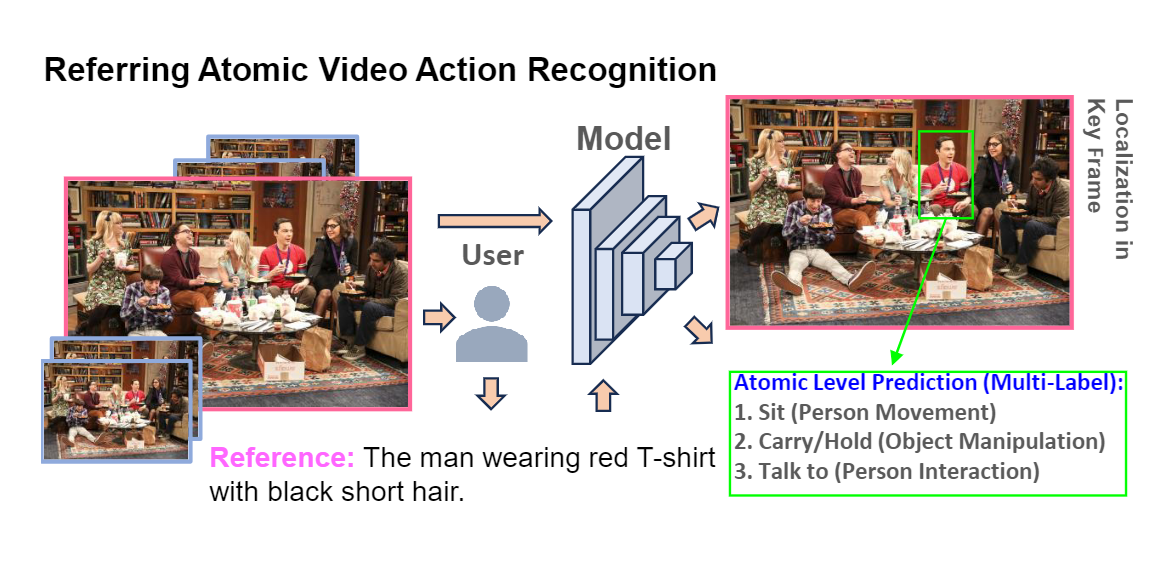

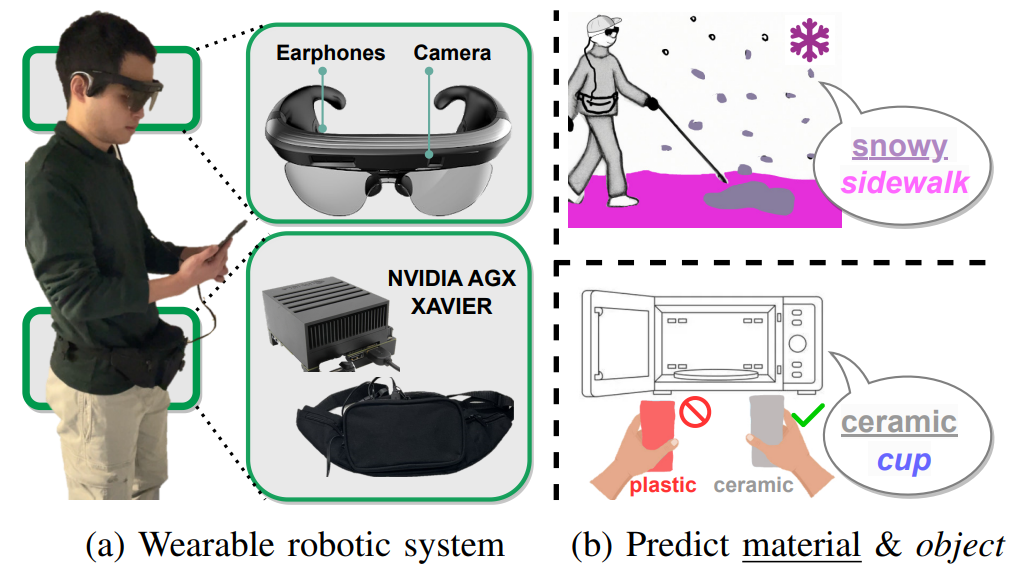

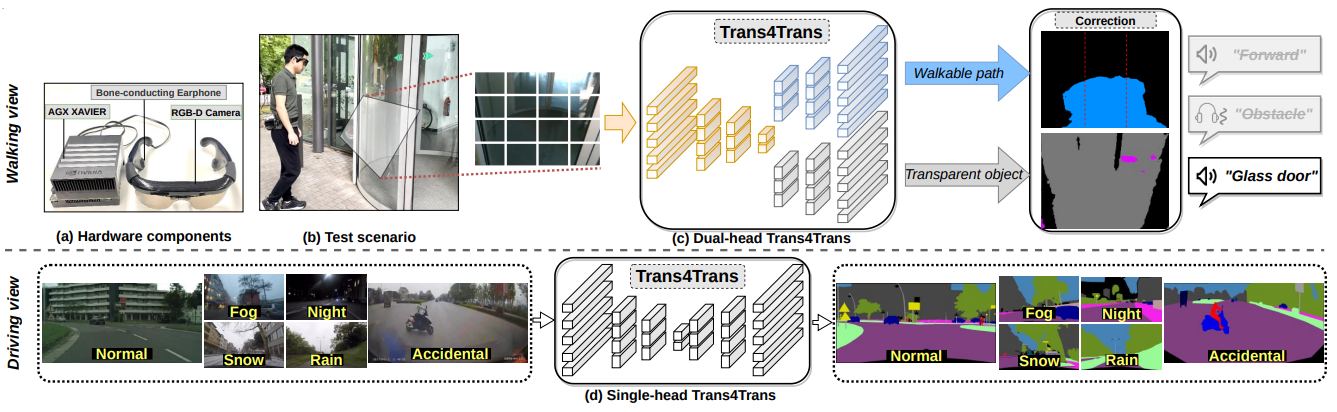

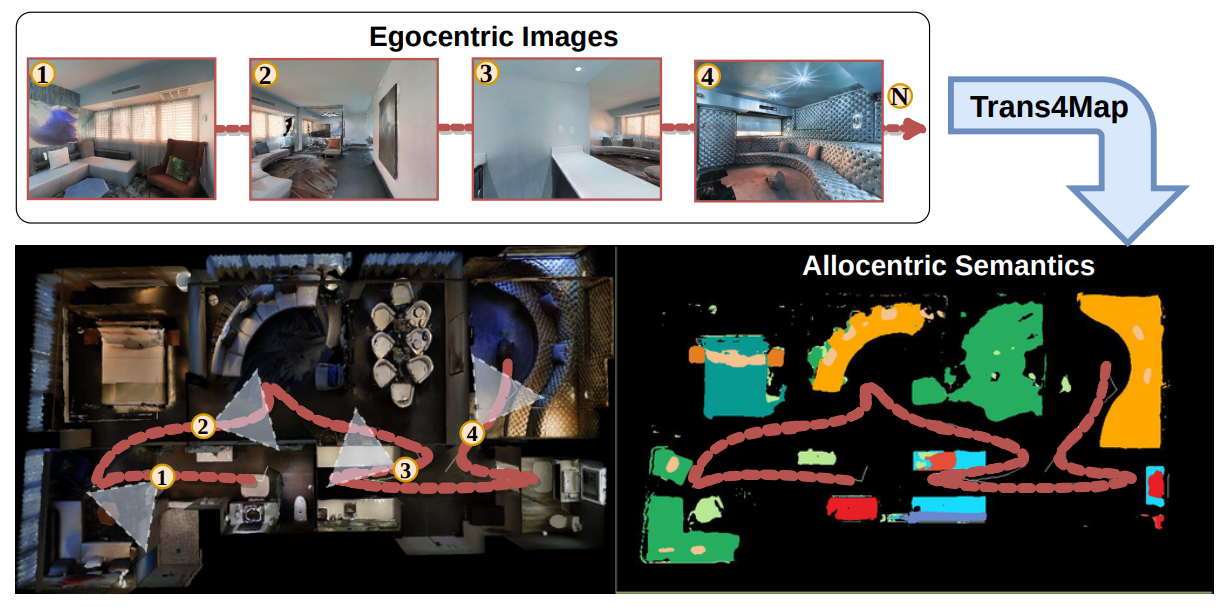

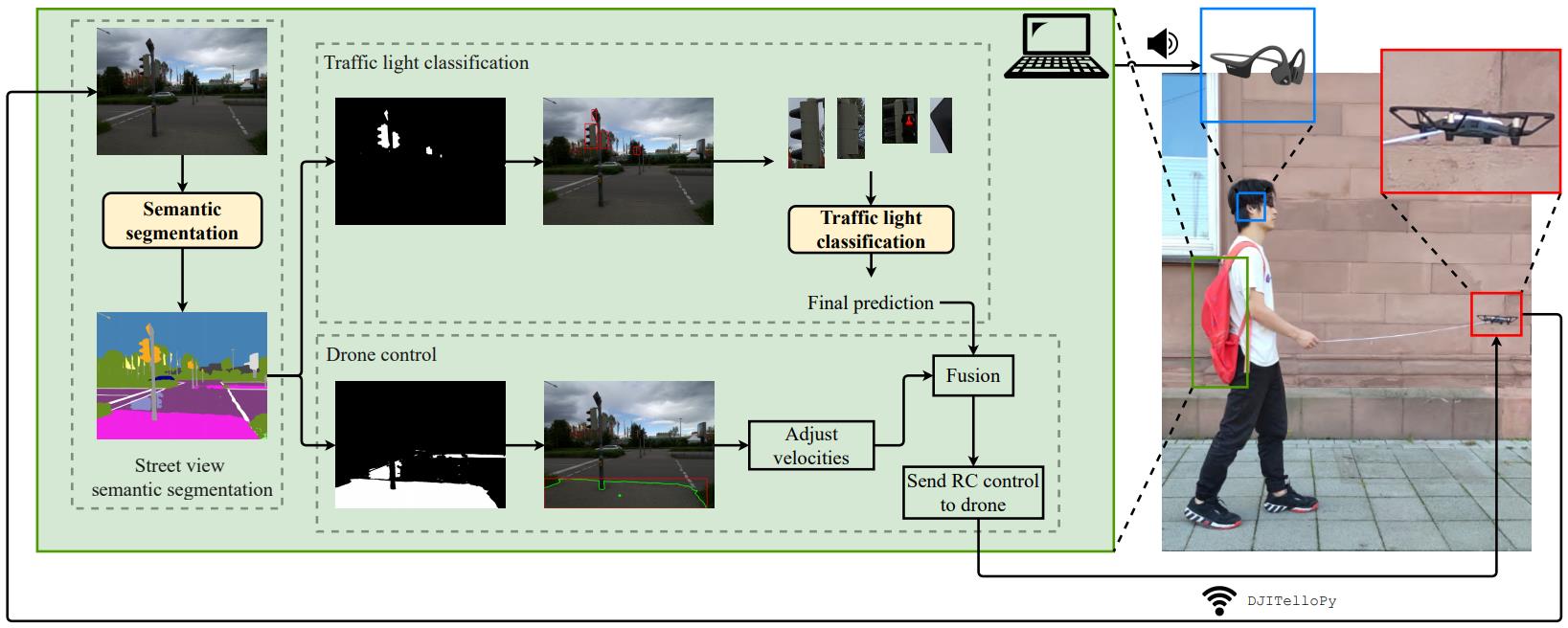

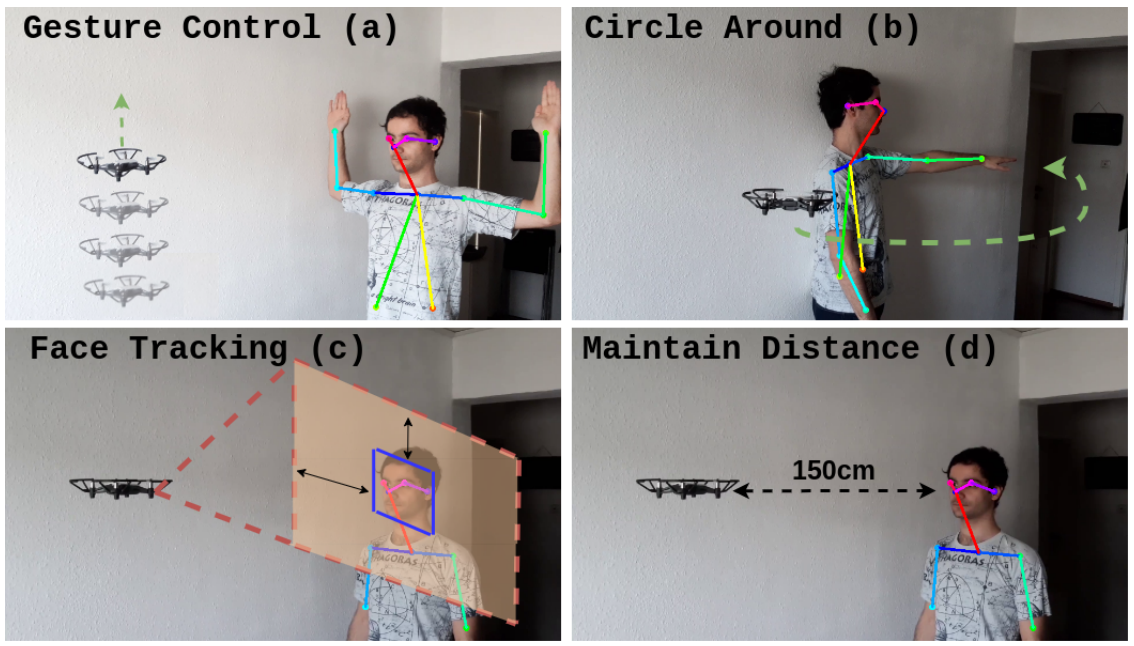

J. Zheng*, J. Zhang*, K. Yang, K. Peng, R. Stiefelhagen

ICRA 2024 (🏆 Best paper finalist on HRI) Project page Paper Code

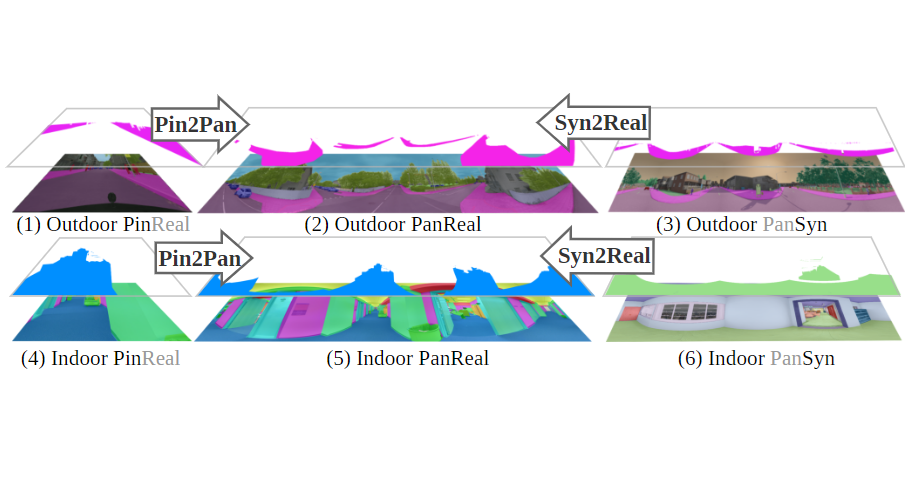

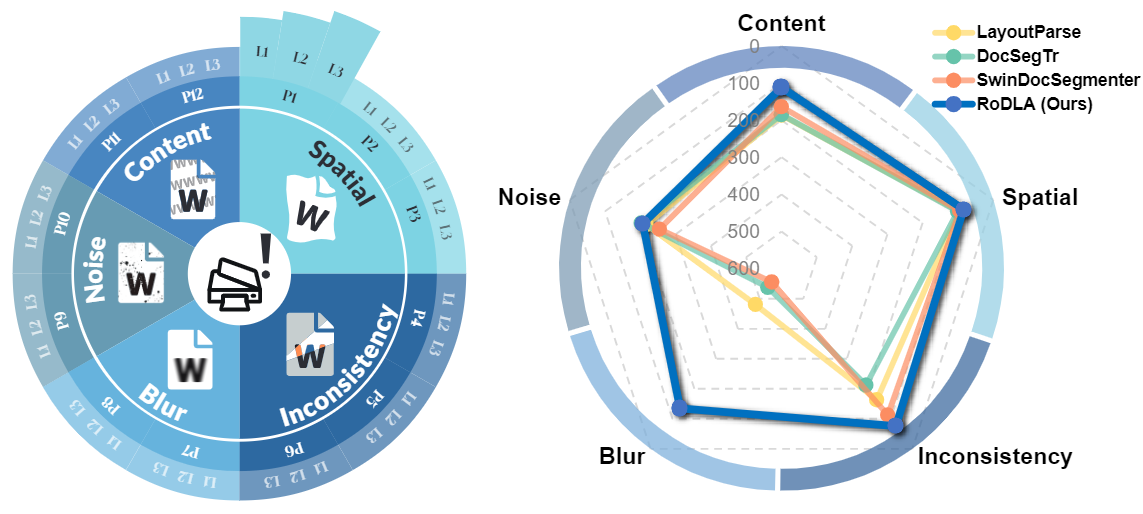

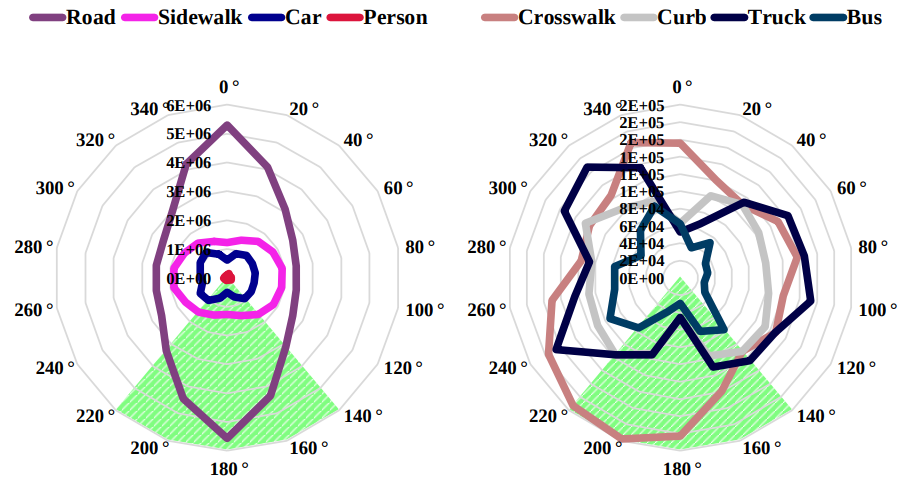

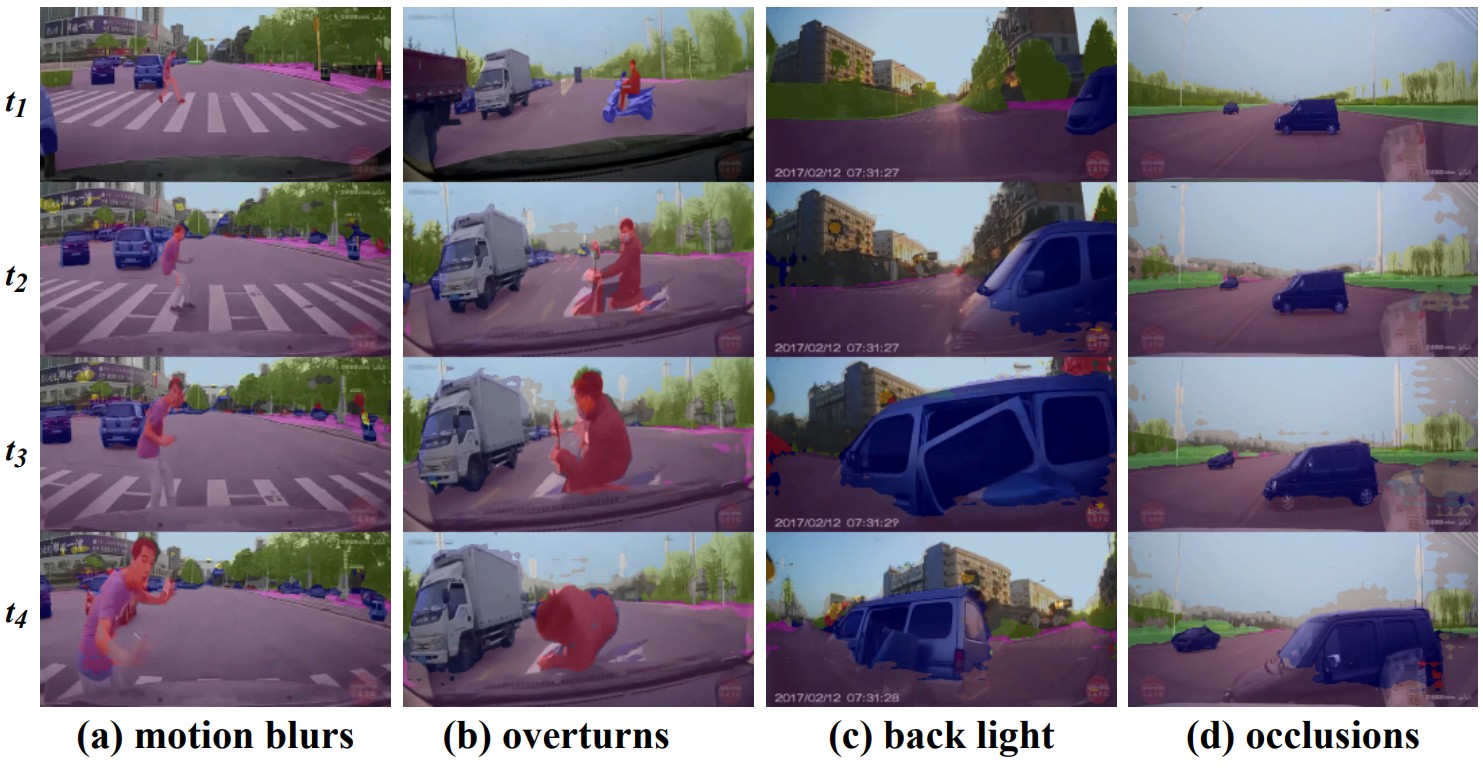

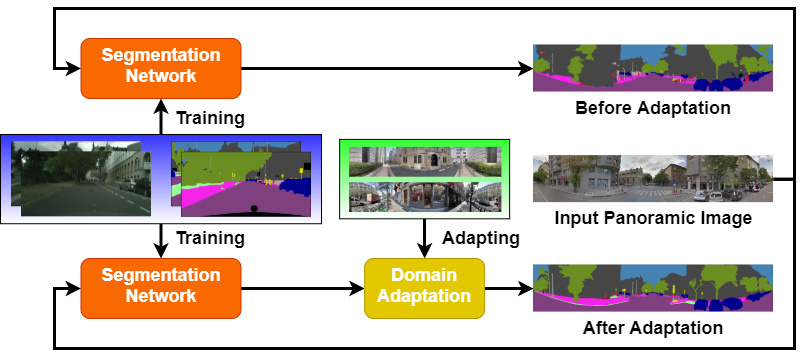

J. Zhang*, R. Liu*, S. Hao, K. Yang, S. Reiß, K. Peng, H. Fu, K. Wang, R. Stiefelhagen

CVPR 2023 Project page Paper Code Dataset

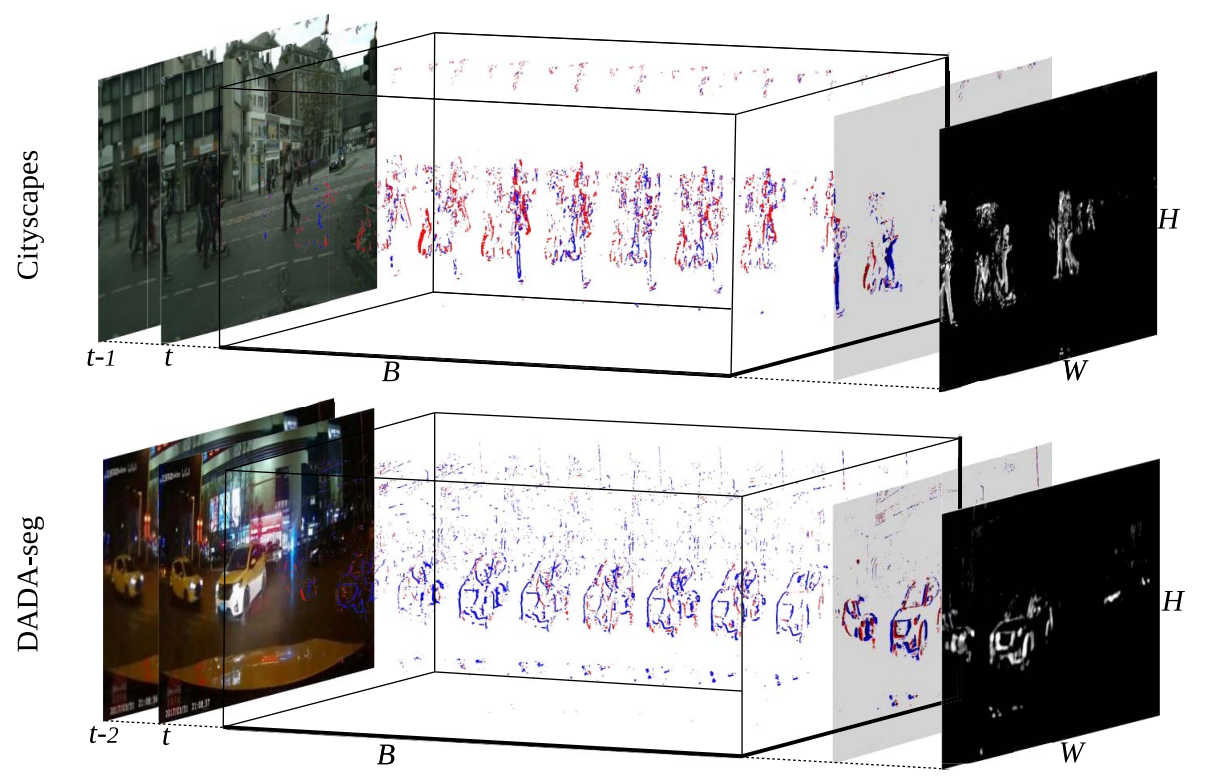

Z. Teng*, J. Zhang*†, K. Yang, K. Peng, H. Shi, S. Reiß, K. Cao, R. Stiefelhagen

WACV 2024 Project page Paper Code Dataset

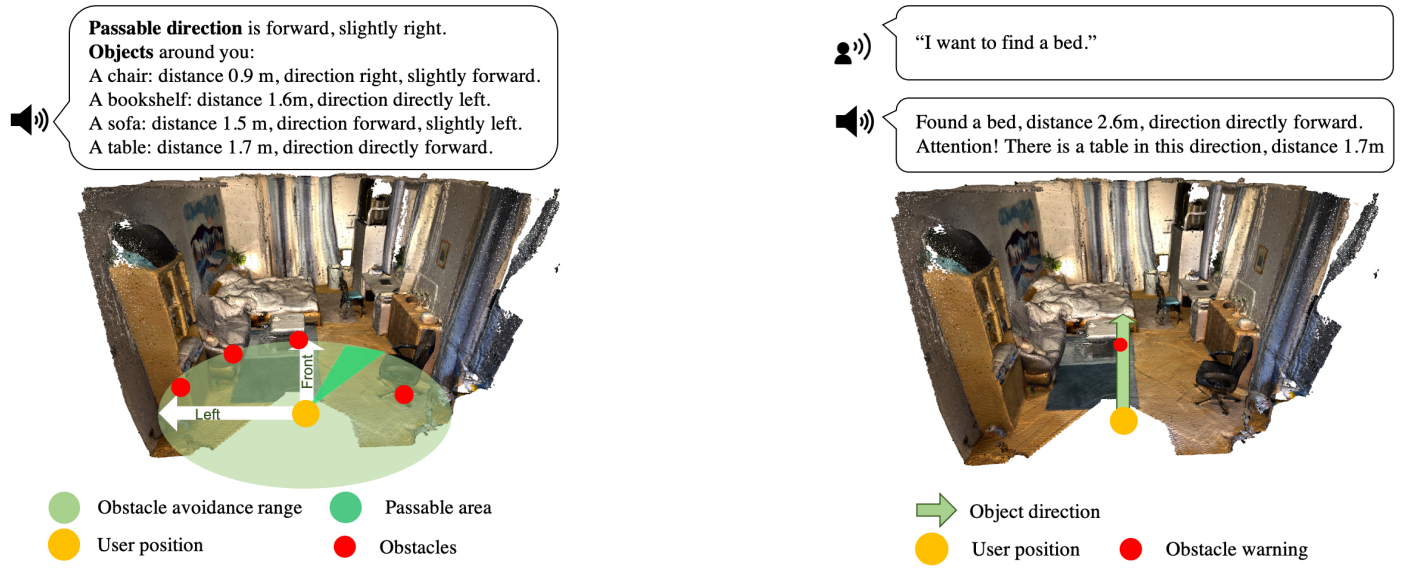

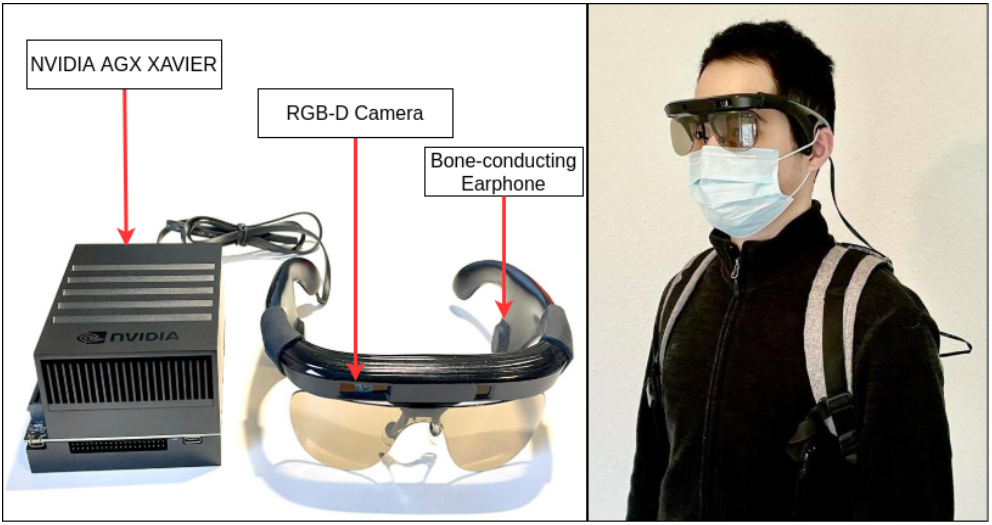

H. Liu, R. Liu, K. Yang, J. Zhang, K. Peng, R. Stiefelhagen

ICCV Workshop on Assistive Computer Vision and Robotics (ACVR) 2021 Paper

Y. Zhang, H. Chen, K. Yang, J. Zhang, R. Stiefelhagen

IEEE RCAR 2021 Paper